Introduction to scheduling

If only one CPU is available, while two or more processes are simultaneously in the Ready state, the scheduler has to determine which process to run next.

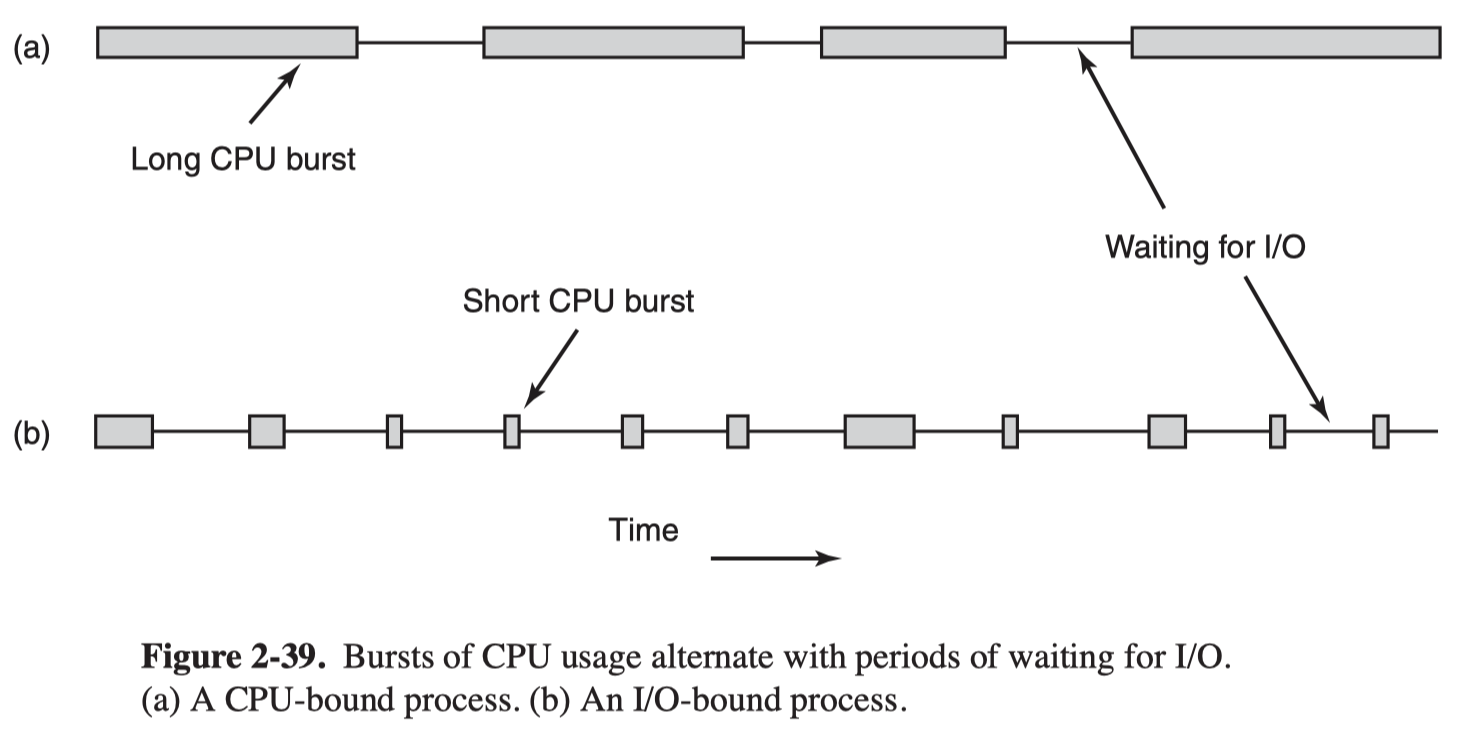

CPU vs I/O Bound

Some process spend most of their time computing, while others spend most of that waiting for I/O. The former are called CPU-bound; the latter are called IO/bound.

When to Schedule

A key issue related to scheduling is when to make scheduling decisions. There are a variety of situations in which scheduling is needed:

- When a new process is created, a decision needs to be made whether to run the parent process or the child process.

- When a process exits, some other process must be chose from the set of ready processes.

- When a process blocks, another process has to be selected to run.

- When an I/O interrupt occurs, the scheduler need to decide whether to run the newly ready process

Categories of Scheduling Algorithms

Nonpreemptive vs Preemptive

Scheduling algorithm can be divided into two categories with respect to how they deal with clock interrupt.

A nonpreemptive scheduling algorithm picks a process to run and then just lets it run until it blocks or voluntarily releases the CPU.

A preemptive scheduling algorithm pricks a process and lets it run for a maximum of some fixed time. If it is still running at the end of the time interval, it is suspended and the scheduler picks another process to run.

Batch, Interactive, Real-time

In different environments different scheduling algorithms are needed. Three environment worth distinguishing are:

- Batch

- In batch system, there are no users impatiently waiting for a quick response

- Nonpreemptive algorithms, or preemptive algorithms with long time periods for each process, are all acceptable.

- Interactive

- With interactive users, preemption is essential to keep one process from hogging the CPU and denying service to the others

- Real time

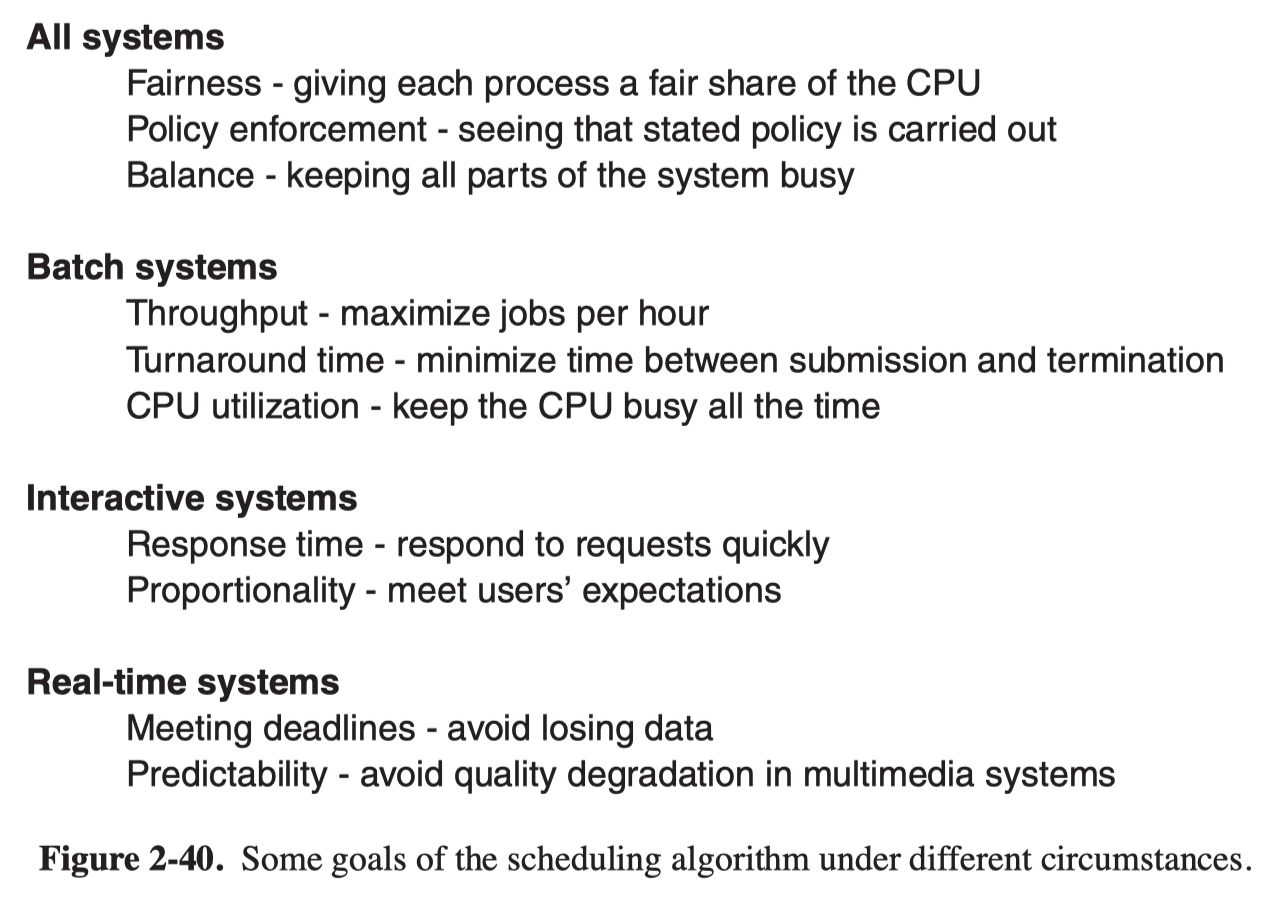

There are some goals when designing a scheduling algorithm.

Scheduling in Batch Systems

First-Come, First Served

With this nonpreemptive algorithm, process are assigned the CPU in order they request it.

- There is a single queue of ready processes

- New processes are put on the end of the queue

- When the running process blocks, the first process on the queue runs next

- When a blocked process becomes ready, it is put on the end of the queue.

Shortest Job First

This nonpreemptive algorithm assumes that the run time are known in advance. When several jobs are waiting to be started, the scheduler picks the shortest job first. It is optimal only when all the jobs are available simultaneously.

Shortest Remaining Time Next

This algorithm is a preemptive version of shortest job first:

- The scheduler always chooses the process whose remaining run time is the shortest.

- When a new job arrives, its total time is compared to the current process’s remaining time. If less, the current process is suspended and the new job started.

Scheduling in Interactive System

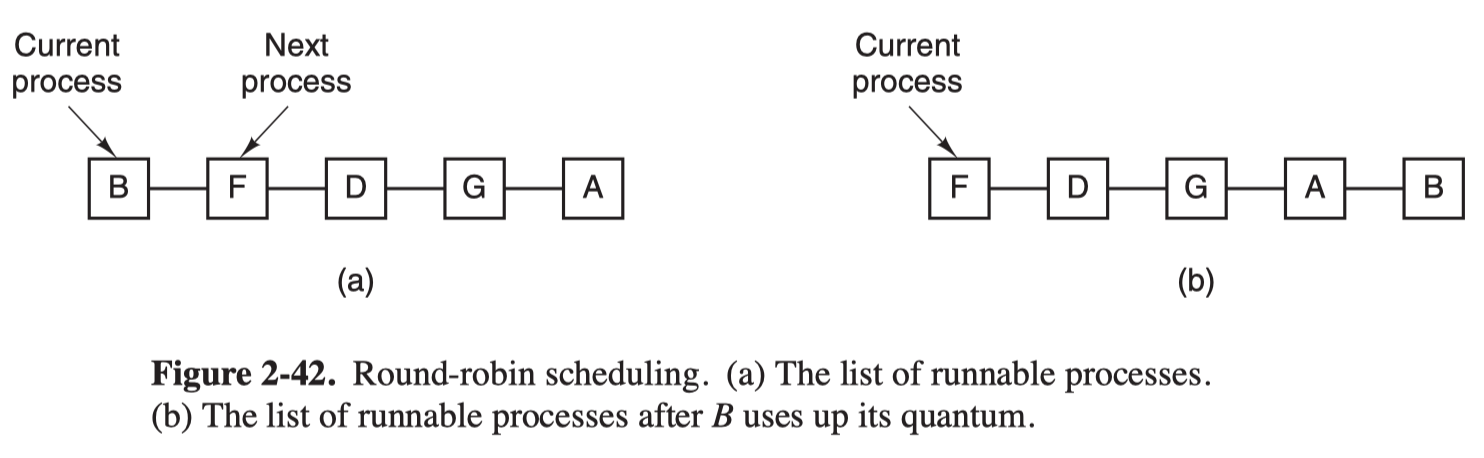

Round-Robin Scheduling

- Each process is assigned a time interval called quantum.

- If the process is still running at the end of the quantum, the CPU is preempted and given to another process.

- The scheduler need to maintain a list of runnable processes.

An interesting issue is the length of the quantum. Setting the quantum too short causes too many context switches and lowers the CPU efficiency, while setting it too long may cause poor response to short interactive requests. If the quantum is set longer than the mean CPU burst, preemption will not often happen.

Priority Scheduling

Each process is assigned a priority, and the runnable process with the highest priority is allowed to run.

To prevent high-priority processes from running forever:

- the scheduler may decrease the priority of the currently running process at each clock interrupt

- Each process may be assigned a maximum time quantum, when it is used up, the next-highest-priority process is given a chance to run.

Priorities can be assigned to processes statically or dynamically.

It’s wise to set a higher-priority to I/O bound tasks. Whenever such a process wants CPU, it should be given the CPU immediately, to let it start its next I/O request, which can then proceed in parallel with another process actually computing.

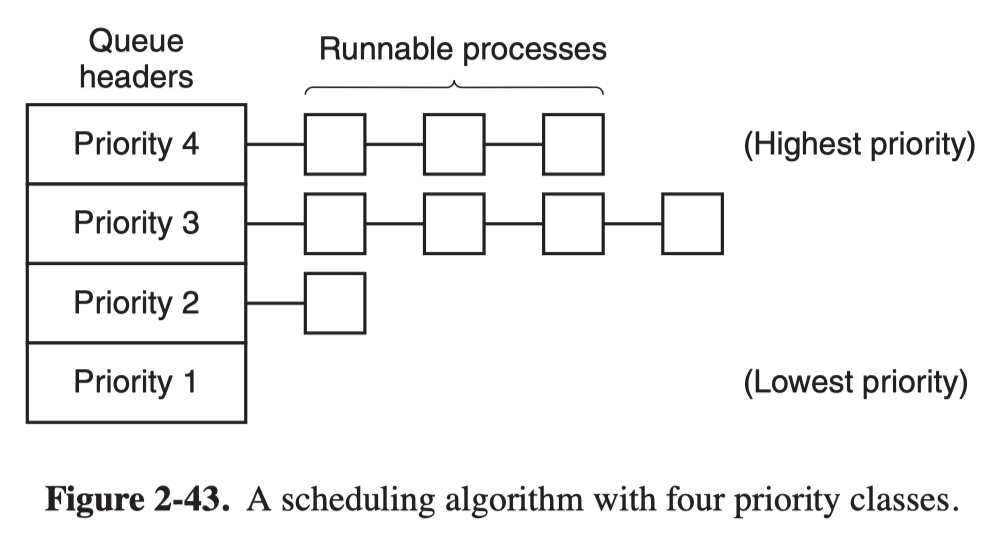

Multiple Queues

It is often convenient to group processes into priority classes and use priority scheduling among the classes but round-robin scheduling within each class.

- When there are runnable processes in higher-priority classes, just run each one in round-robin fashion and never bother with lower-priority classes.

- When high-priority classes are empty, run lower class round-robin, and so on.

- If the priorities are not adjusted occasionally, lower-priority classes may starve to death.

Multi-Level Feedback Queues

Each process is assgined two priority:

- static priority: non-changing unless requested through system call

- dynamic priority: lies in $[0, static_priority - 1]$, changes constantly based on current state of the process

Scheduling is done on a preemptive basis, and a dynamic priority mechanism is used.

- When a process has be preempted, decrement its dynamic priority;

When it reaches $-1$, reset it to $static_priority - 1$.

Lottery Scheduling

The basic idea is to give processes lottery tickets for various system resources, such as CPU time.

- When a scheduling decision is made, a lottery ticker is chosen at random, and the process holding that ticket gets the resource.

- More important processes can be given extra tickets, to increase their odds of winning.

- Cooperating processes may exchange tickets if they wish.

Fair-Share Scheduling

This model takes into account which user owns a process before scheduling it. In this model, each user is allocated some fraction of the CPU and the scheduler picks processes in such a way as to enforce it.

Scheduling in Real-Time Systems

Real-time systems are generally categorized as:

- Hard real time: there are absolute deadlines that must be met

- Soft real time: missing an occasional dealine is tolerable

The events that a real-time system responds to can be categorized as:

- Periodic: occur at regular intervals

- Aperiodic: occur unpredictably

If there are $m$ periodic events and event $i$ occurs with period $P_i$ and requires $C_i$ seconds of CPU time to handle each event, then the load can be handled only if

$$

\sum_{i=1}^m \frac{C_i}{P_i} \leq 1

$$

A real-time that meets this criterion is said to be schedulable.