Processes

The Process Model

A process is an instance of an executing program, including the current values of its:

- Program counter: points at the current instruction

- Registers

- Variables

A process is an activity of some kind: it has a program, input, output, and a state. If the same program is running twice, that counts as two processes.

Process Creation

Four principal events cause processes to be created.

- System initialization: when an operating system is booted, two kinds of processes are created:

    - Foreground processes that interact with users

    - Background processes (daemons) that handle activities such as email and Web pages

- Execution of a process-creation system call by a running process: often a running process will issue system calls to create one or more new processes to help it do its job

- A user request to create a new process: in interactive systems, users can start a program by typing a command or clicking on an icon.

- Initiation of a batch job
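On POSIX systems the process-creation system call is fork() (Windows uses CreateProcess instead). The sketch below uses Python's os.fork, so it runs only on POSIX systems, and the function name spawn_and_wait is hypothetical. The parent creates a child, the child exits at once with a chosen code, and the parent waits for it and reads that code back.

```python
import os


def spawn_and_wait(exit_code: int) -> int:
    """Create a child process with fork() and return the exit code it reports (POSIX only)."""
    pid = os.fork()  # one call, two returns: 0 in the child, the child's PID in the parent
    if pid == 0:
        # Child: a new process running the same program from the same point.
        # os._exit ends the child immediately, skipping the parent's cleanup handlers.
        os._exit(exit_code)
    # Parent: block until this particular child terminates, then decode its status.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status)


if __name__ == "__main__":
    print("child exited with", spawn_and_wait(7))
```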

Process Termination

A process usually terminates due to one of the following conditions:

- Normal exit (voluntary)

- Error exit (voluntary)

- Fatal error (involuntary)

- Killed by another process (involuntary)
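On POSIX systems a parent can tell how a child terminated from the status returned by waitpid. The sketch below (POSIX only; run_child and describe_termination are hypothetical names) reproduces the categories above. A fatal error such as a segmentation fault also ends the process through a signal (SIGSEGV), so at this level it looks like being killed by another process; only the signal number differs.

```python
import os
import signal
import time


def describe_termination(status: int) -> str:
    """Map a raw wait status onto the termination categories above."""
    if os.WIFEXITED(status):  # the process called exit itself (voluntary)
        code = os.WEXITSTATUS(status)
        return "normal exit" if code == 0 else f"error exit (code {code})"
    if os.WIFSIGNALED(status):  # ended by a signal (involuntary)
        return f"killed by signal {os.WTERMSIG(status)}"
    return "unknown"


def run_child(action: str) -> str:
    """Fork a child that exits normally ("ok"), with an error ("error"), or gets killed ("kill")."""
    pid = os.fork()
    if pid == 0:
        if action == "ok":
            os._exit(0)
        if action == "error":
            os._exit(1)
        time.sleep(60)  # victim: waits until another process kills it
        os._exit(0)
    if action == "kill":
        os.kill(pid, signal.SIGKILL)  # another process (the parent) terminates the child
    _, status = os.waitpid(pid, 0)
    return describe_termination(status)


if __name__ == "__main__":
    for action in ("ok", "error", "kill"):
        print(action, "->", run_child(action))
```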

Implementation of Processes

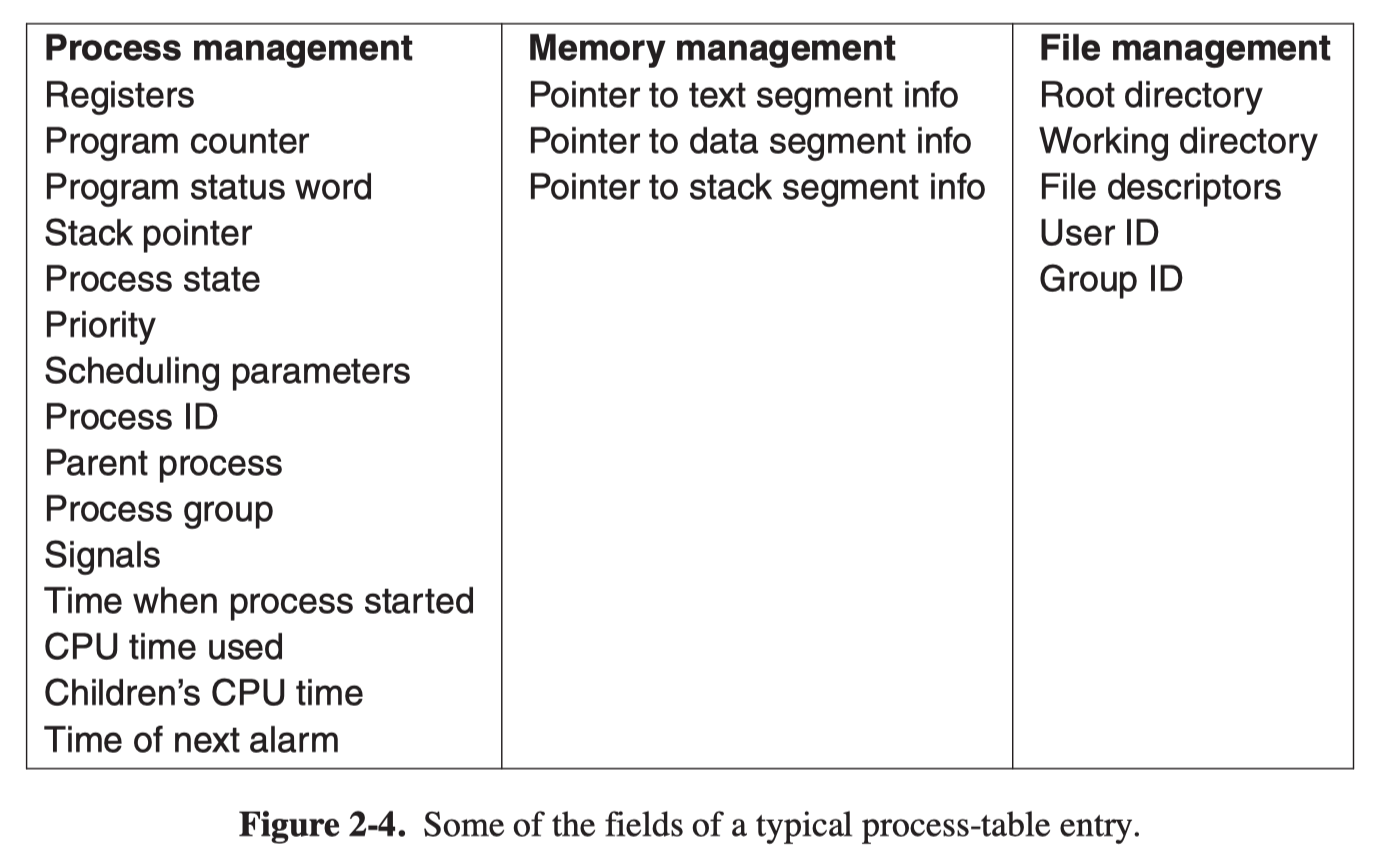

The operating system maintains a table (an array of structures), called the process table, with one entry (a process control block) per process. Each entry contains important information about the process’s state.
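A process table can be sketched as a fixed-size array of PCB records. This is a simplified illustration: the field names (pid, state, program_counter, registers, priority, open_files) and the table size are assumptions, and a real kernel stores much more, such as memory-management and accounting information.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"


@dataclass
class PCB:
    """One process-table entry. Field names are illustrative, not taken from any real kernel."""
    pid: int
    state: State = State.READY
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    priority: int = 0
    open_files: list = field(default_factory=list)


class ProcessTable:
    """Fixed-size array of PCB slots; an empty slot holds None."""

    def __init__(self, size: int):
        self.slots: list = [None] * size
        self.next_pid = 1

    def create(self, priority: int = 0) -> PCB:
        for i, slot in enumerate(self.slots):
            if slot is None:  # first free slot gets the new entry
                pcb = PCB(pid=self.next_pid, priority=priority)
                self.next_pid += 1
                self.slots[i] = pcb
                return pcb
        raise RuntimeError("process table full")

    def remove(self, pid: int) -> None:
        for i, slot in enumerate(self.slots):
            if slot is not None and slot.pid == pid:
                self.slots[i] = None  # free the slot for reuse
                return
        raise KeyError(pid)
```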

Process State Models

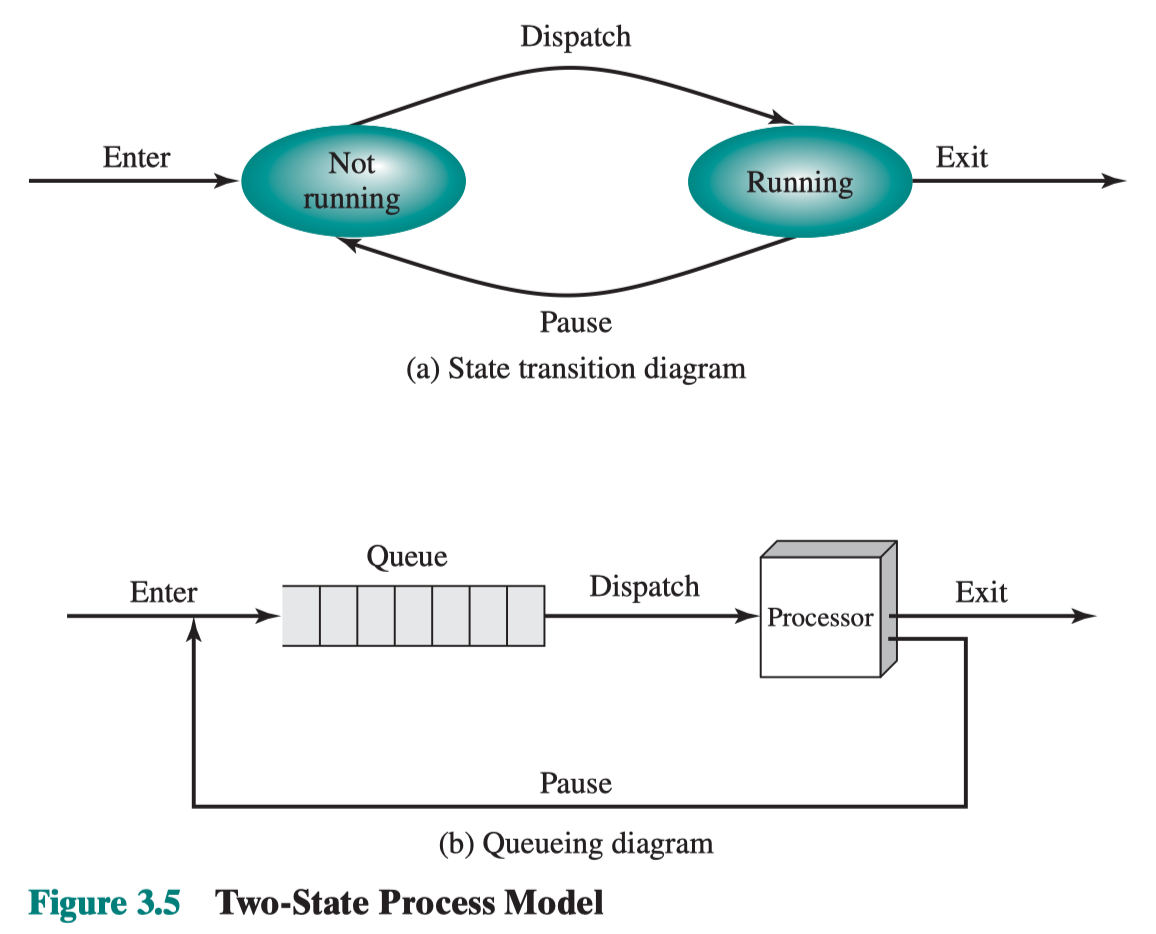

A Two-State Process Model

In this model, a process may be in one of two states: Running or Not Running.

- When the OS creates a new process, it creates a process control block for the process and enters the process into the system in the Not Running state.

- From time to time, the currently running process will be interrupted, and the dispatcher will select some other process to run.

- The former process moves from the Running state to the Not Running state, and one of the other processes moves to the Running state.

Processes that are not running must be kept in a queue, waiting their turn to execute.
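The two-state model can be simulated with a single FIFO queue. This sketch assumes the running process is interrupted after a fixed time quantum (a timer interrupt) and that each process needs a known amount of CPU time; two_state_schedule, bursts and quantum are hypothetical names.

```python
from collections import deque


def two_state_schedule(pids, bursts, quantum):
    """Return the order in which the dispatcher runs processes in the two-state model.

    bursts[pid] is the (hypothetical) CPU time each process still needs.
    """
    not_running = deque(pids)        # every new process enters Not Running
    remaining = dict(bursts)
    trace = []
    while not_running:
        pid = not_running.popleft()  # dispatch: Not Running -> Running
        trace.append(pid)
        remaining[pid] -= quantum    # run until interrupted or finished
        if remaining[pid] > 0:
            not_running.append(pid)  # interrupted: Running -> Not Running
        # otherwise the process has finished and leaves the system
    return trace
```

With bursts A=3, B=1, C=2 and a quantum of 1, the dispatcher runs A, B, C, A, C, A.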

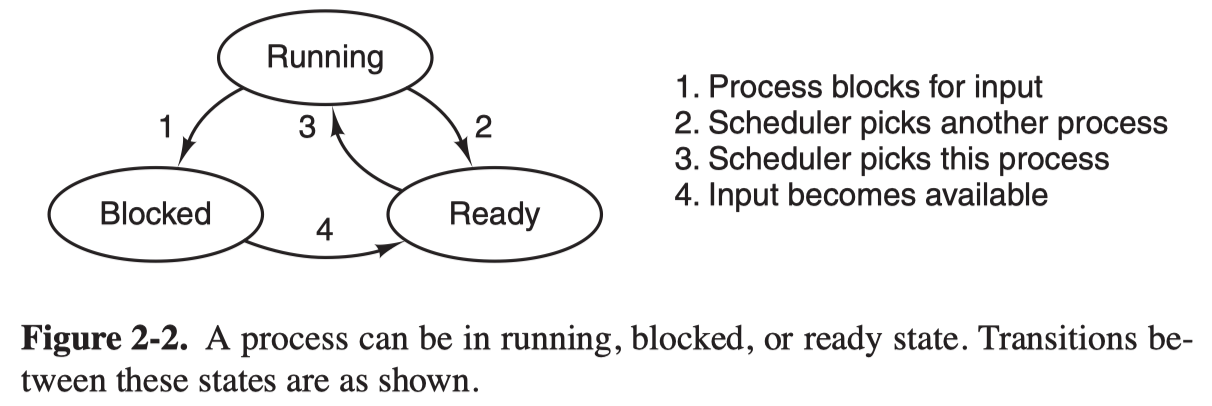

A Three-State Process Model

In this model, a process may be in one of three states:

- Running (actually using the CPU at that instant)

- Ready (runnable; temporarily stopped to let another process run)

- Blocked (unable to run until some external event happens)
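The transitions among the three states can be written as a small lookup table. The event names (wait_for_event, preempt, dispatch, event_occurs) are hypothetical labels for the four legal transitions; any other combination, such as going directly from Blocked to Running, is rejected.

```python
from enum import Enum


class State(Enum):
    RUNNING = "Running"
    READY = "Ready"
    BLOCKED = "Blocked"


# The four legal transitions of the three-state model, keyed by (current state, event).
TRANSITIONS = {
    (State.RUNNING, "wait_for_event"): State.BLOCKED,  # process blocks for input
    (State.RUNNING, "preempt"): State.READY,           # scheduler picks another process
    (State.READY, "dispatch"): State.RUNNING,          # scheduler picks this process
    (State.BLOCKED, "event_occurs"): State.READY,      # input becomes available
}


def next_state(state: State, event: str) -> State:
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"illegal transition: {event} in state {state.value}") from None
```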

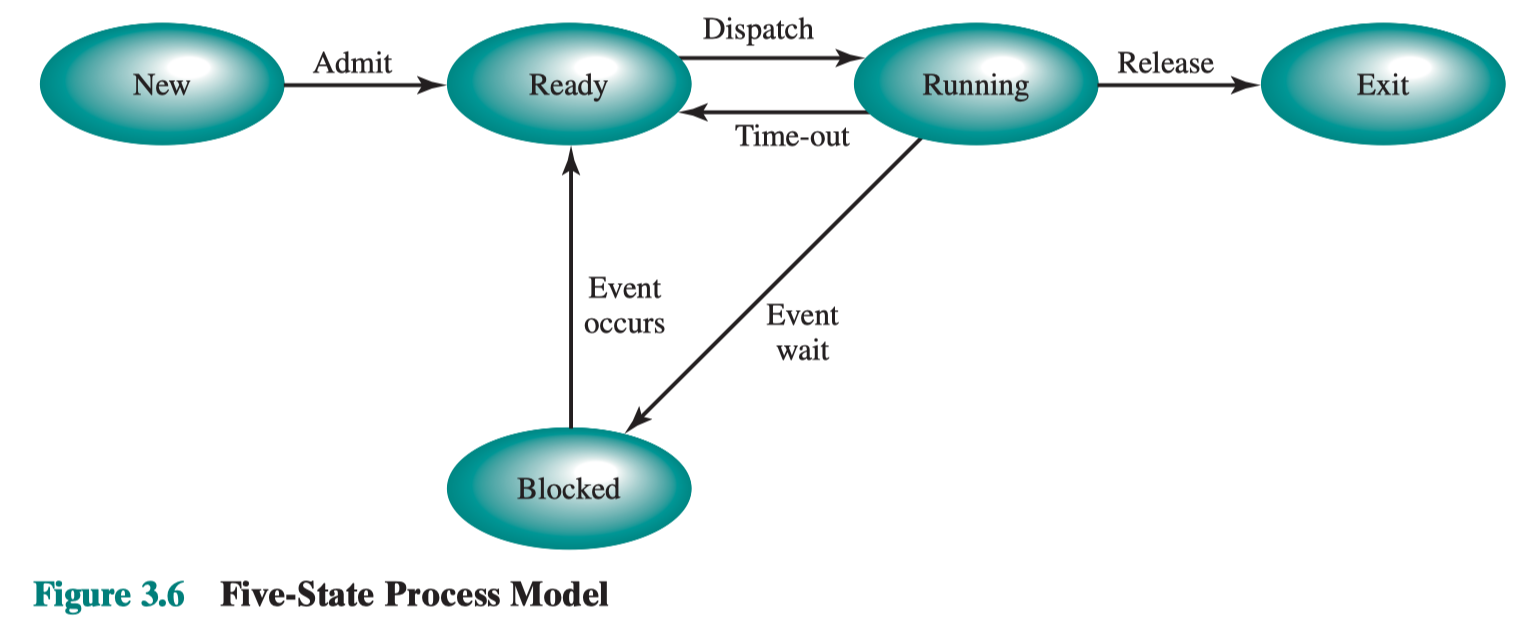

A Five-State Process Model

In this model, we add the New and Exit states:

- New: created but not yet admitted to the pool of executable processes. For example, although the process control block has been created, the new process may not yet have been loaded into main memory.

- Exit: released from the pool of executable processes because it halted or aborted.
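The New state lets the OS create a process without committing memory to it yet. The sketch below assumes a hypothetical limit max_loaded on how many processes fit in memory; a New process is admitted (New to Ready) only when a slot is free, and a terminated process moves to Exit, which may in turn admit a waiting New process. The class and method names are hypothetical.

```python
from collections import deque


class FiveStateSystem:
    """Tracks New, admitted (Ready/Running/Blocked) and Exit processes under a memory limit."""

    def __init__(self, max_loaded: int):
        self.max_loaded = max_loaded  # hypothetical number of processes that fit in memory
        self.new = deque()            # PCB exists, program not yet loaded
        self.loaded = []              # admitted to the pool of executable processes
        self.exited = []              # halted or aborted, no longer executable

    def create(self, pid: int) -> None:
        self.new.append(pid)          # creating the PCB does not admit the process
        self._admit()

    def terminate(self, pid: int) -> None:
        self.loaded.remove(pid)
        self.exited.append(pid)       # the OS may keep its record briefly, e.g. for accounting
        self._admit()                 # freed memory may let a New process in

    def _admit(self) -> None:
        while self.new and len(self.loaded) < self.max_loaded:
            self.loaded.append(self.new.popleft())  # New -> Ready
```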

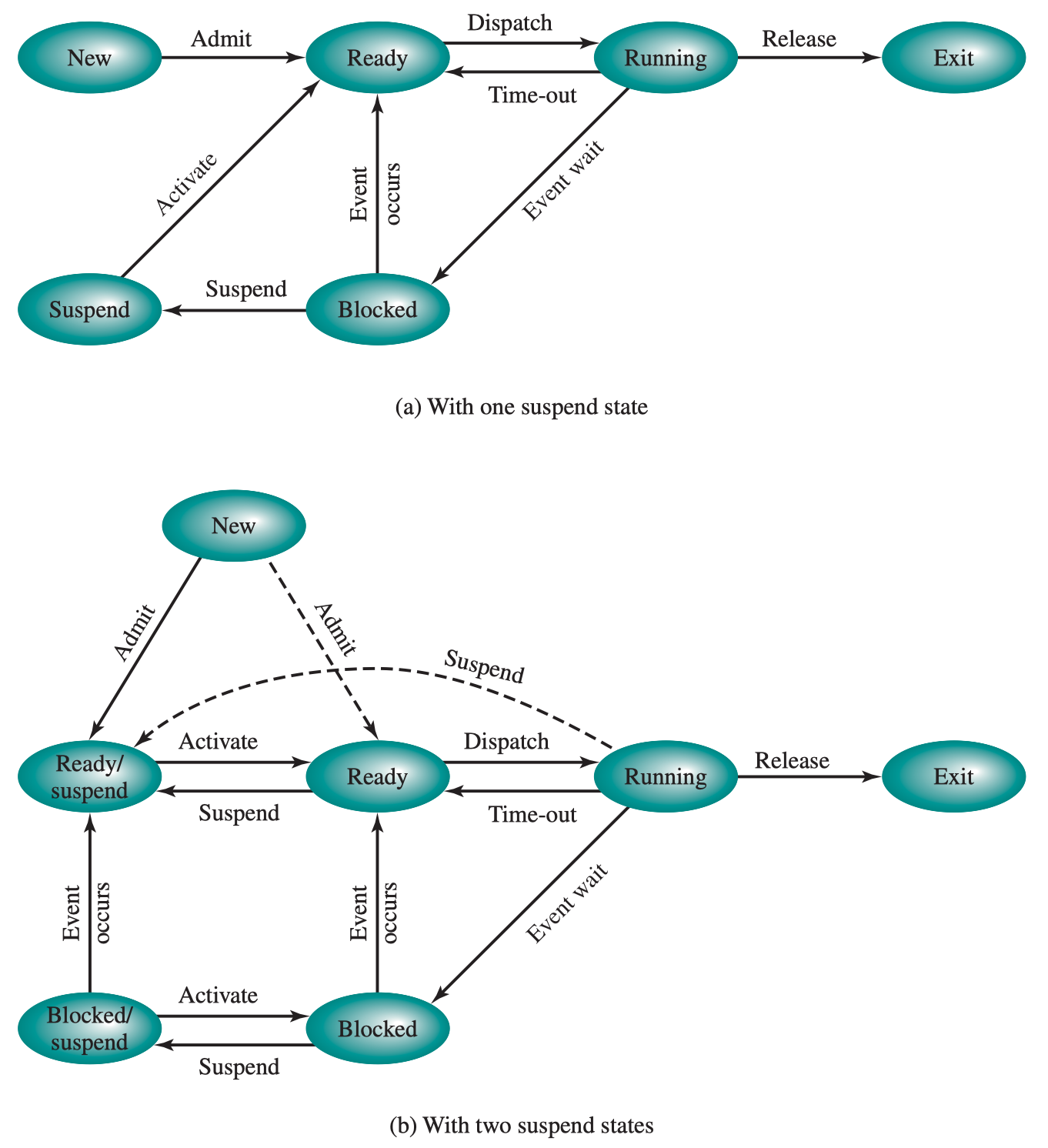

With Suspended States

When none of the processes in main memory is in the Ready state (for example, all of them are waiting on I/O):

- The OS swaps one of the blocked processes out to disk into a suspend queue. This is a queue of existing processes that have been temporarily kicked out of main memory, or suspended.

- Then the OS brings in another process from the Suspend queue, or it admits a newly created process.

With the use of swapping, the Suspend state must be added to this model.

However, one problem remains: all of the processes in the Suspend state were in the Blocked state at the time of suspension, and there is no point in bringing a blocked process back into main memory, because it is still not ready to execute.

Therefore, we need to distinguish the two independent concepts: whether a process is waiting on an event (blocked or not) and whether a process has been swapped out of main memory (suspended or not).

To accommodate this $2\times 2$ combination, we need four states:

- Ready: in main memory and runnable

- Blocked: in main memory but not runnable

- Blocked/Suspend: in secondary memory and waiting on an event

- Ready/Suspend: in secondary memory but ready for execution
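The two independent dimensions can be sketched as follows: an event moves a process from Blocked to Ready, or from Blocked/Suspend to Ready/Suspend, without changing whether it is in memory, and a swap-in candidate is taken only from Ready/Suspend. The function names, the dictionary bookkeeping and the first-found selection rule are assumptions for illustration.

```python
from enum import Enum


class State(Enum):
    READY = "Ready"
    BLOCKED = "Blocked"
    READY_SUSPEND = "Ready/Suspend"
    BLOCKED_SUSPEND = "Blocked/Suspend"


def event_occurs(states: dict, waiting_on: dict, event: str) -> None:
    """Wake every process waiting on `event`, whether it is in memory or on disk."""
    woken = [pid for pid, ev in waiting_on.items() if ev == event]
    for pid in woken:
        del waiting_on[pid]
        if states[pid] is State.BLOCKED:
            states[pid] = State.READY          # still in main memory
        elif states[pid] is State.BLOCKED_SUSPEND:
            states[pid] = State.READY_SUSPEND  # still on disk, but now runnable


def choose_swap_in(states: dict):
    """Pick a suspended process worth bringing back into main memory."""
    for pid, state in states.items():
        if state is State.READY_SUSPEND:
            return pid
    return None  # bringing in a Blocked/Suspend process would gain nothing
```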

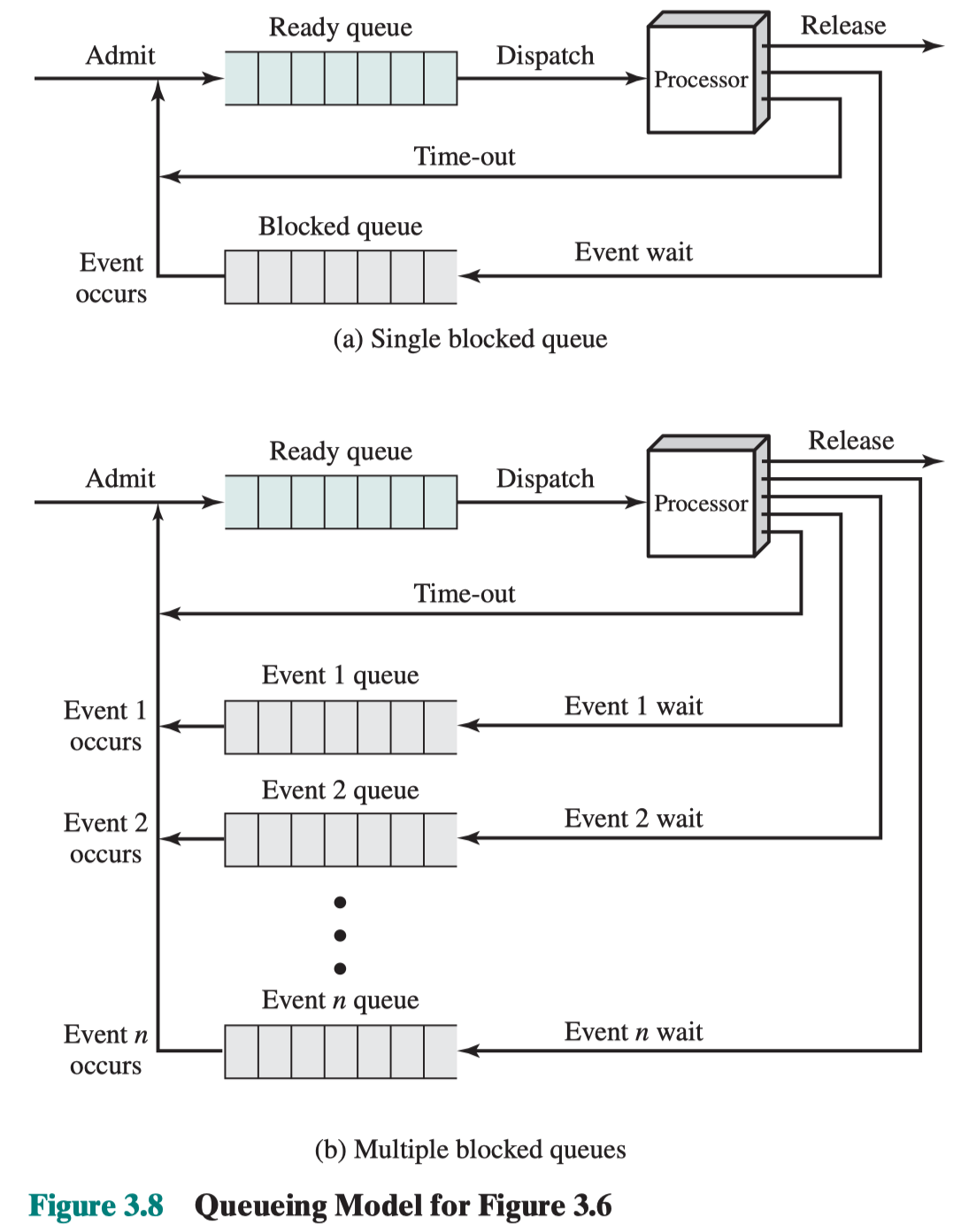

Using Queues to Manage Processes

In the two-state model, processes in the Not Running state are kept in a single queue. However, this implementation is inadequate: some processes in the Not Running state are ready to execute, while others are blocked. With a single queue, the dispatcher would have to scan the queue looking for a process that is ready.

We can manage the processes with two queues: a Ready queue and a Blocked queue.

- As each process is admitted to the system, it is placed in the Ready queue.

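- The dispatcher selects one process to run from the Ready queue.

- A running process that is removed from execution before it has finished is placed in either the Ready queue (for example, when it is preempted) or the Blocked queue (when it must wait on an event).

- When an event occurs, only the processes in the Blocked queue that are waiting on that event are moved to the Ready queue.

We can also use multiple Blocked queues, one for each event. Then, when an event occurs, the entire queue for that event can be moved to the Ready queue.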
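A minimal sketch of a Ready queue plus one Blocked queue per event, assuming processes are identified by simple names; the class and method names are hypothetical.

```python
from collections import defaultdict, deque


class Scheduler:
    def __init__(self):
        self.ready = deque()
        self.blocked = defaultdict(deque)  # one Blocked queue per event
        self.running = None

    def admit(self, pid):
        self.ready.append(pid)             # newly admitted processes join the Ready queue

    def dispatch(self):
        self.running = self.ready.popleft()  # no scanning: every entry is runnable
        return self.running

    def timeout(self):
        self.ready.append(self.running)    # preempted, still runnable
        self.running = None

    def wait(self, event):
        self.blocked[event].append(self.running)  # waits in that event's queue
        self.running = None

    def event_occurs(self, event):
        # Only this event's queue is touched, and the whole queue moves to Ready.
        self.ready.extend(self.blocked.pop(event, ()))
```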

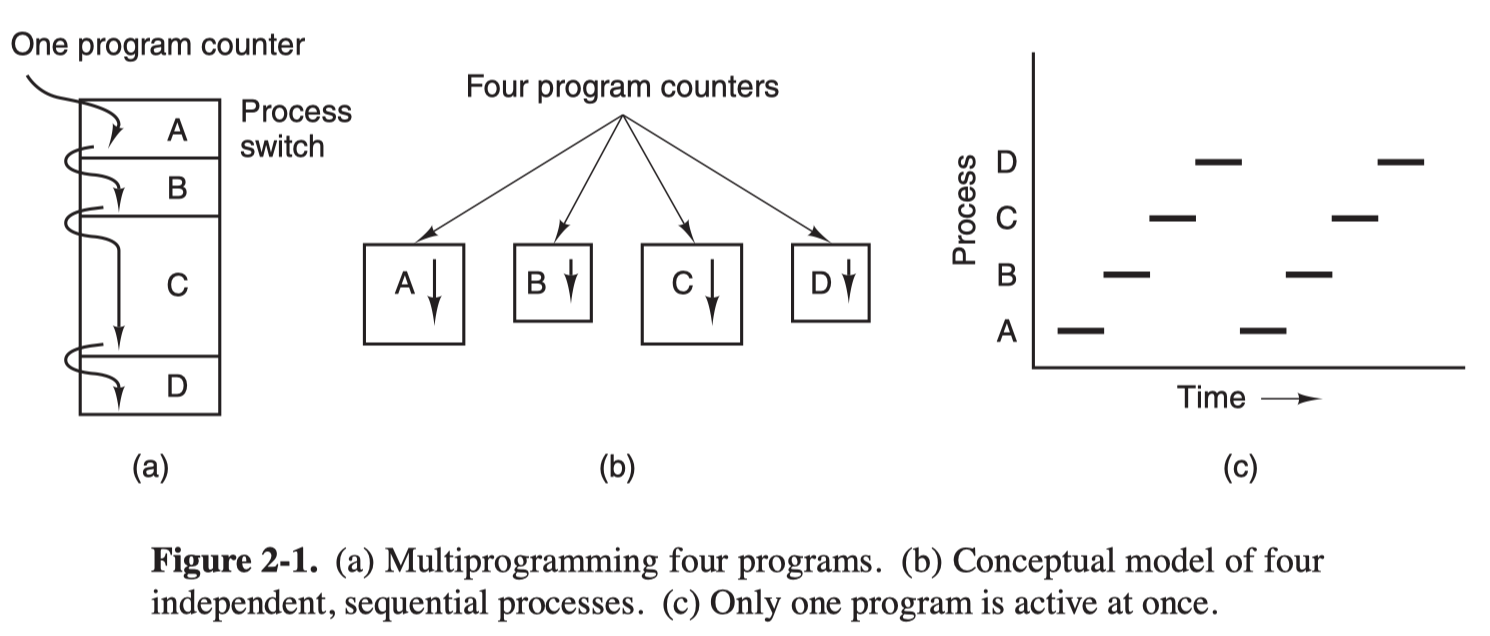

Multiprogramming

Conceptually, each process has its own virtual CPU. In reality, the real CPU switches back and forth from process to process. This rapid switching is called multiprogramming. On a single CPU, only one process is actually running at any given instant.

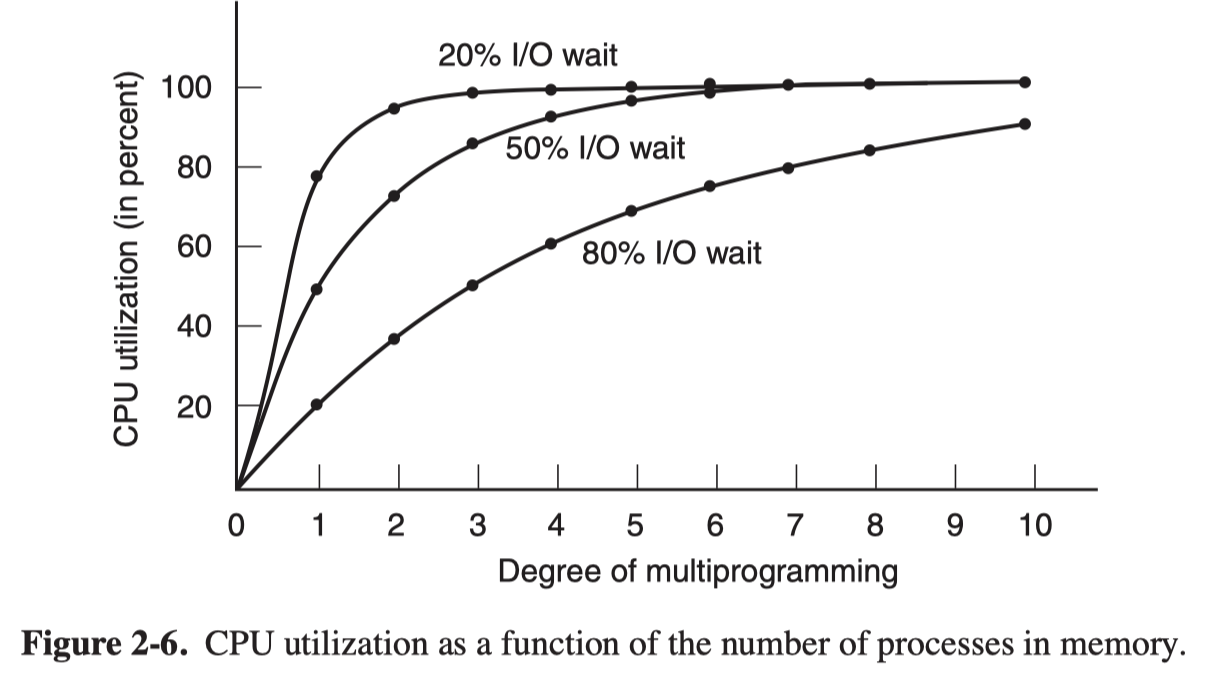

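Suppose that a process spends a fraction $p$ of its time waiting for I/O to complete. With $n$ processes in memory at once, the probability that all $n$ are waiting for I/O at the same time (leaving the CPU idle) is $p^n$, so the CPU utilization is $1 - p^n$. The number of processes in memory, $n$, is called the degree of multiprogramming.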
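The formula can be evaluated directly. This sketch assumes, as the formula itself does, that processes wait for I/O independently of one another, so the result is an approximation; the function name is hypothetical.

```python
def cpu_utilization(p: float, n: int) -> float:
    """Estimate CPU utilization: the CPU is idle only when all n processes wait on I/O."""
    if not 0.0 <= p <= 1.0 or n < 0:
        raise ValueError("need 0 <= p <= 1 and n >= 0")
    return 1.0 - p ** n


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(n, round(cpu_utilization(0.8, n), 3))
```

For example, with $p = 0.8$ (80% of the time spent waiting for I/O), utilization is $0.2$ with one process, $1 - 0.8^4 = 0.5904$ with four, and about $0.83$ with eight.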

Threads

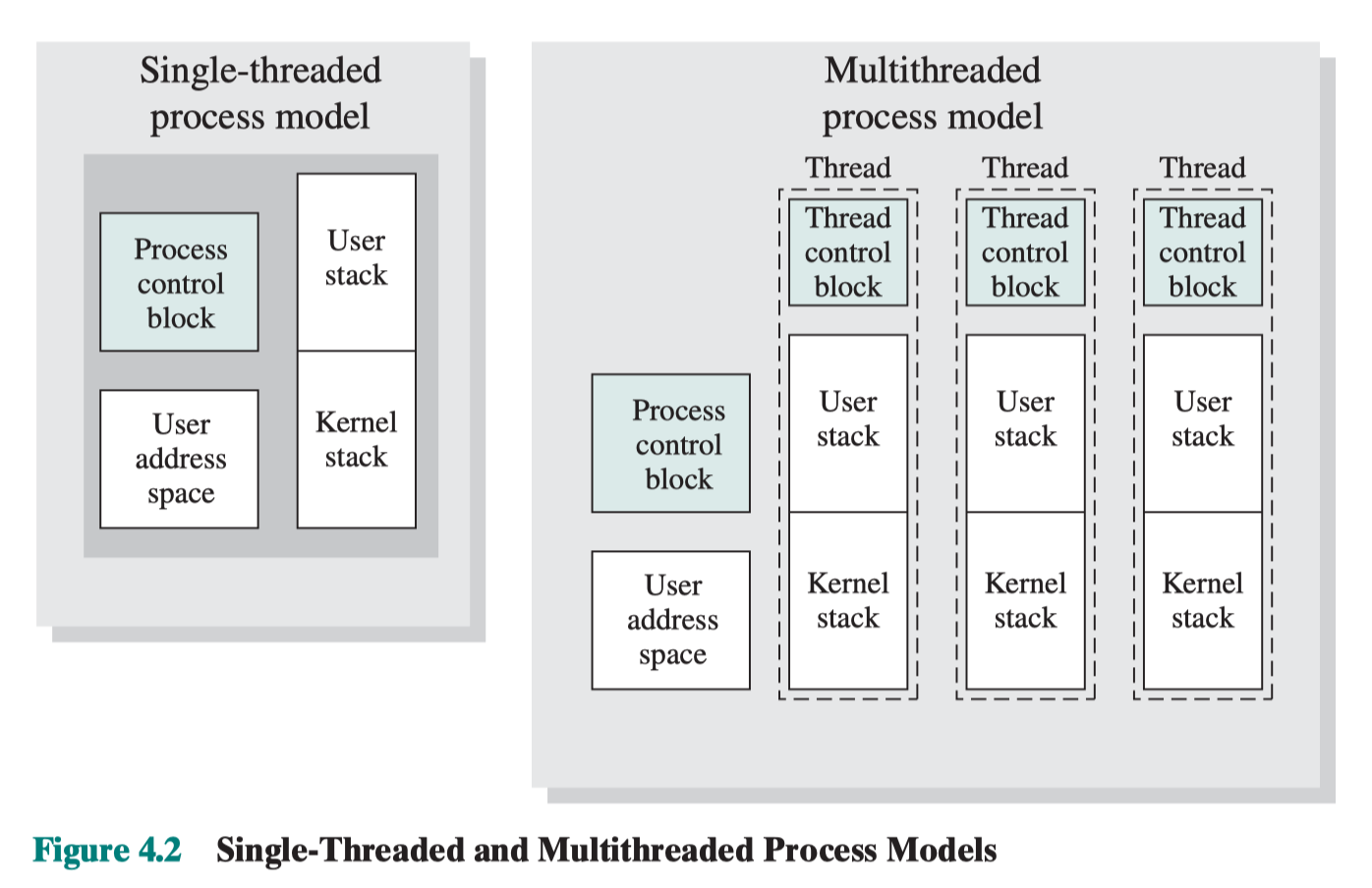

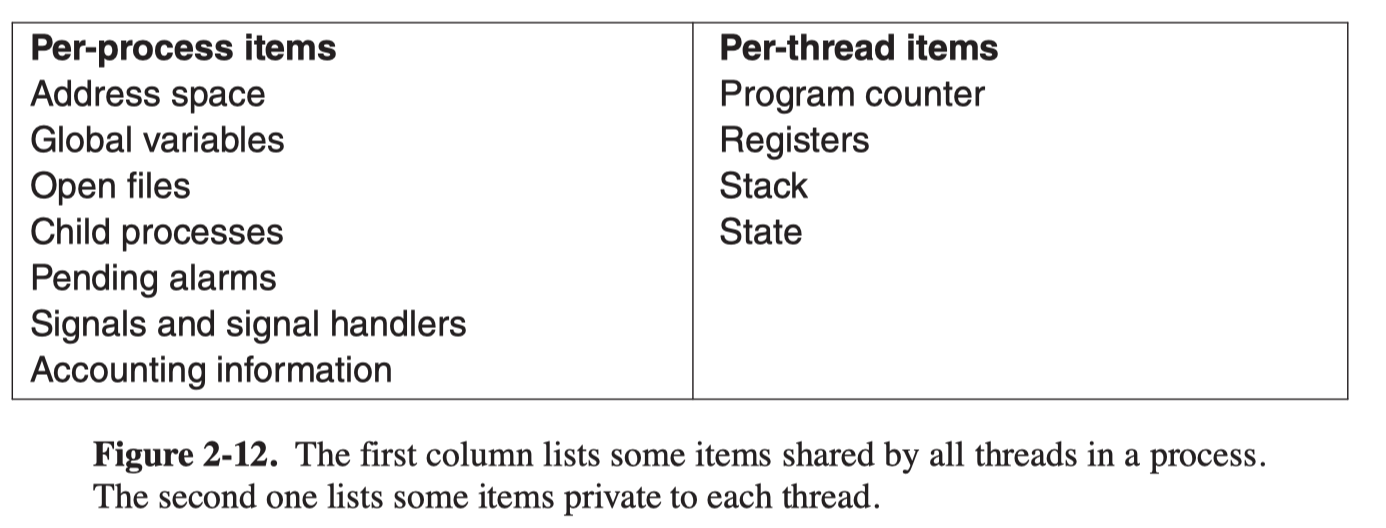

All threads of a process share the same address space and resources, except that each thread has its own stack, registers, and program counter.
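A small Python sketch of this sharing: every thread appends to the same global list, while each thread's local variable stays private to its own call stack. The names are hypothetical, and the lock is there because threads of one process get no protection from each other.

```python
import threading

shared_results = []          # global data: a single copy, visible to every thread
lock = threading.Lock()      # no protection between threads, so guard shared updates


def worker(name: str, count: int) -> None:
    local_total = 0          # local variable: private to this thread's own call stack
    for _ in range(count):
        local_total += 1
    with lock:
        shared_results.append((name, local_total))


def run(n_threads: int = 3, count: int = 1000) -> list:
    shared_results.clear()
    threads = [threading.Thread(target=worker, args=(f"t{i}", count))
               for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()             # wait for every thread before reading the shared list
    return sorted(shared_results)
```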

There are several reasons for having threads:

- In many applications, multiple activities are going on at once. With threads, programming becomes simpler. It also allows activities to overlap, thus speeding up the application.

- Threads are lighter weight than processes, so they are faster to create and destroy.

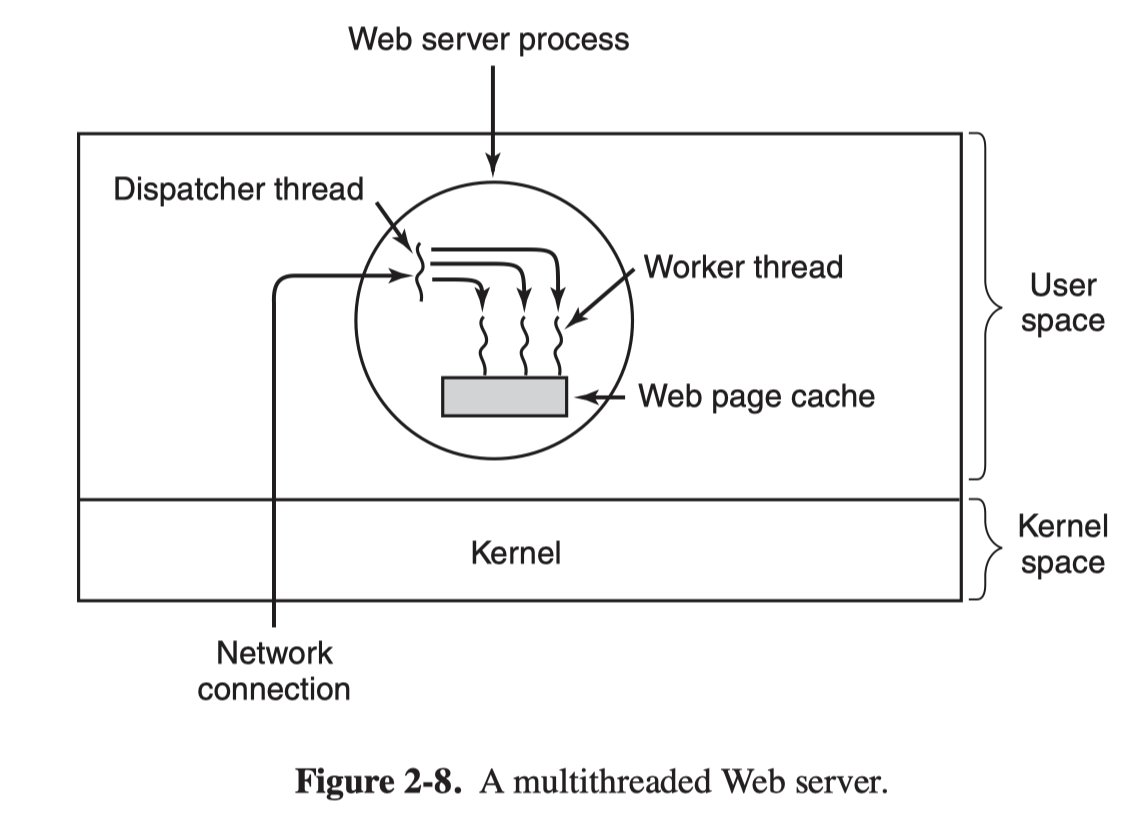

Consider a multithreaded Web server as an example.

- The dispatcher thread reads incoming requests from the network.

- After examining a request, it wakes up a blocked worker thread and hands it the request.

- When the worker wakes up, it checks whether the request can be satisfied from the Web page cache. If not, it reads the page from disk and blocks until the disk operation completes.

- When the worker blocks, another thread is chosen to run.
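The dispatcher/worker structure can be sketched with Python threads. Assumptions: instead of explicitly waking one specific blocked worker, the dispatcher puts each request on a shared queue.Queue, and an idle worker blocked in get() picks it up; read_from_disk, the cache contents and the page strings are hypothetical.

```python
import queue
import threading
import time

cache = {"/index.html": "<h1>cached</h1>"}  # hypothetical Web page cache
lock = threading.Lock()                     # protects cache and replies


def read_from_disk(path: str) -> str:
    """Hypothetical stand-in for a blocking disk read."""
    time.sleep(0.05)
    return f"<p>{path} from disk</p>"


def worker(work: queue.Queue, replies: dict) -> None:
    while True:
        path = work.get()                # idle workers block here until handed a request
        if path is None:                 # sentinel value: shut down
            return
        with lock:
            page = cache.get(path)
        if page is None:                 # cache miss: only this worker blocks on the "disk"
            page = read_from_disk(path)
            with lock:
                cache[path] = page
        with lock:
            replies[path] = page


def dispatcher(incoming: list, n_workers: int = 3) -> dict:
    work: queue.Queue = queue.Queue()
    replies: dict = {}
    workers = [threading.Thread(target=worker, args=(work, replies))
               for _ in range(n_workers)]
    for w in workers:
        w.start()
    for path in incoming:                # requests "read from the network", in order
        work.put(path)                   # hand each request to a waiting worker
    for _ in workers:
        work.put(None)                   # one shutdown sentinel per worker
    for w in workers:
        w.join()
    return replies
```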

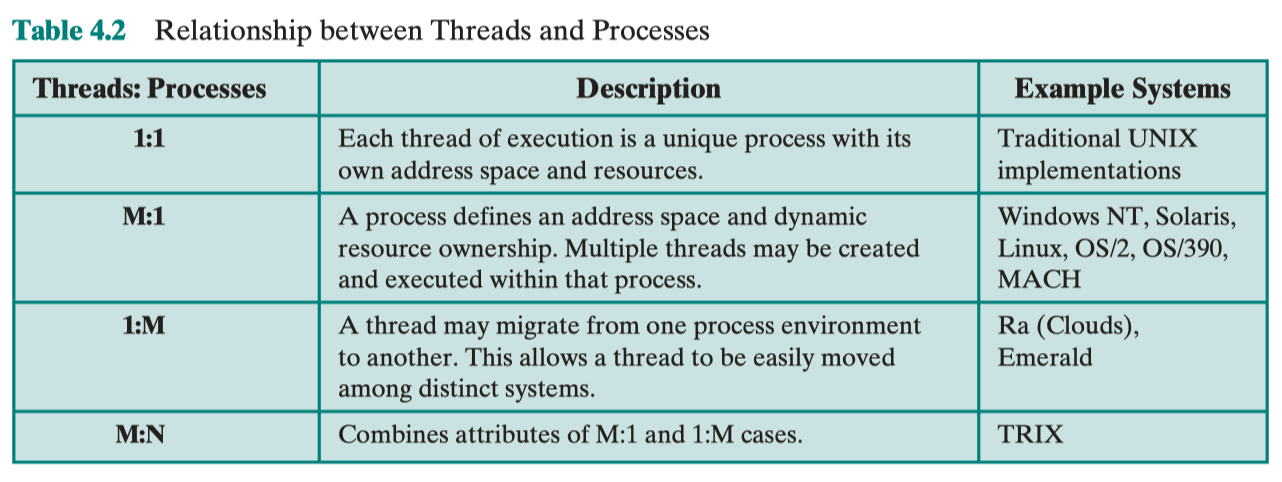

Processes & Threads

In a multithreaded environment, there is still a single process control block and user address space, but now each thread has its own stack, as well as its own thread control block containing register values, priority, and other thread-related state information. There is no protection between threads of the same process.

A process is the unit of resource management, while a thread is the unit of execution.

Multithreading

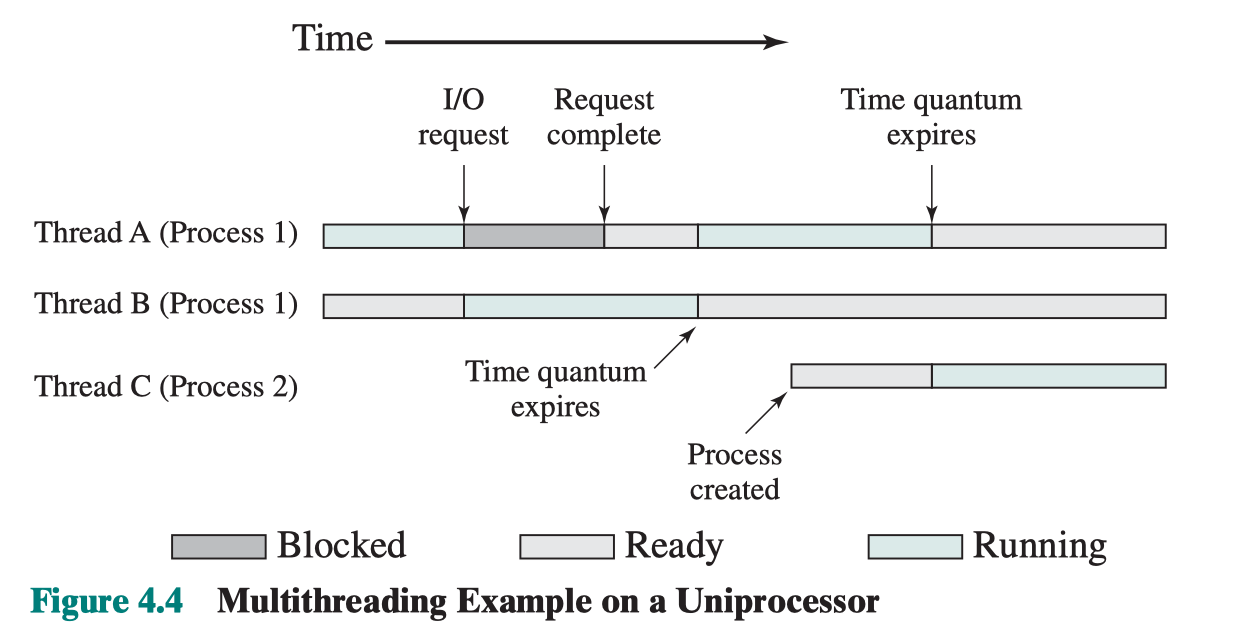

Allowing multiple threads in the same process is called multithreading. On a uniprocessor, multiprogramming enables the interleaving of multiple threads within multiple processes.

Implementation of Threads

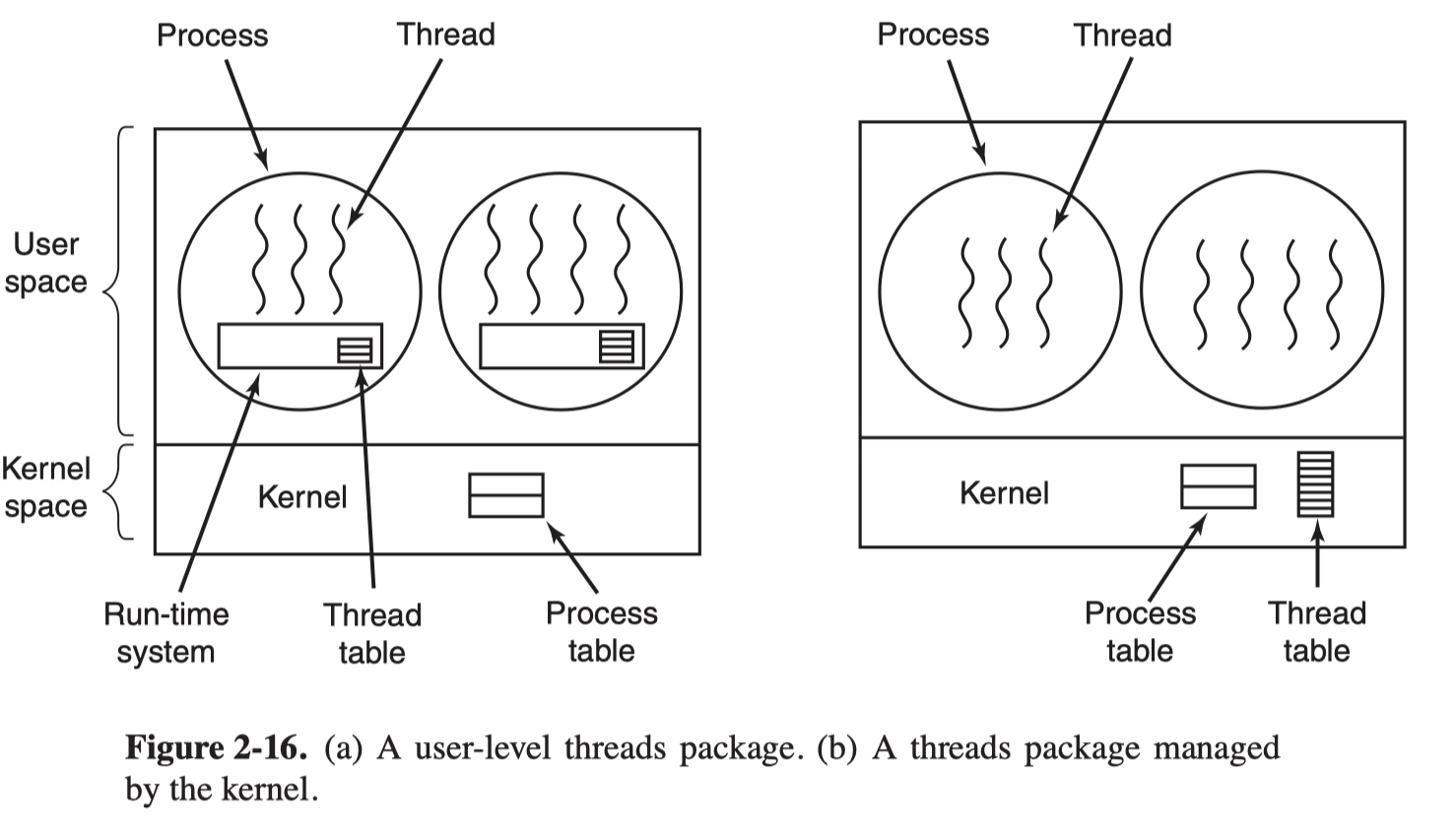

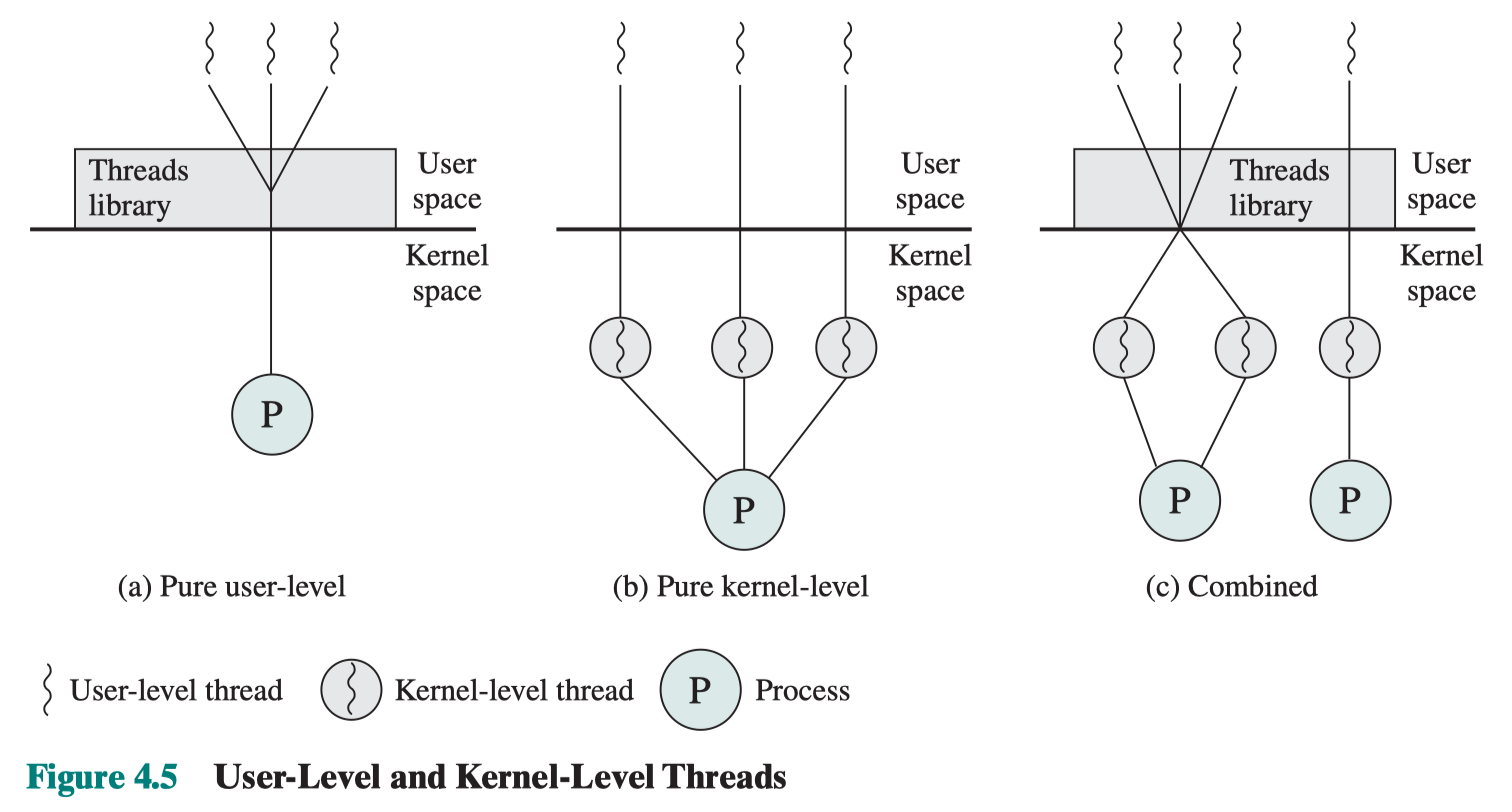

There are two main places to implement threads: user space and kernel space, and a hybrid choice is also possible.

User-Level Threads (ULTs)

With ULTs, thread management is done by the application, and the kernel is not aware that the threads exist. With this approach, threads are implemented by a library (a run-time system) in each process.

The kernel continues to schedule the process as a unit and assigns a single execution state.

Advantages of ULTs:

- Thread switching does not require kernel mode, since all thread-management data structures are in the user address space of the process.

- Scheduling can be application specific: the scheduling algorithm can be tailored to the application without disturbing the underlying OS scheduler.

- A user-level threads package can be implemented on any OS, even one that does not support threads.

- ULTs also scale better, since the thread table and the threads' stacks are kept in user space rather than taking up table and stack space in the kernel.

Disadvantages:

- When a ULT executes a blocking system call or causes a page fault, all of the threads within the process are blocked.

- A multithreaded application cannot take advantage of multiprocessing, since the kernel assigns a process to only one processor at a time.
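A user-level threads package can be approximated in Python with generators: each generator plays the role of a thread, and a round-robin scheduler written in ordinary user code plays the role of the run-time library. This is an analogy rather than a real package: real packages save and restore each thread's registers and stack in user space, while generators simply suspend at each yield, so scheduling here is purely cooperative. The names ult and run_ults are hypothetical.

```python
from collections import deque


def ult(name: str, steps: int, log: list):
    """A user-level 'thread': each yield hands control back to the user-space scheduler."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield                   # like thread_yield(): an ordinary return, no kernel trap


def run_ults(threads) -> None:
    """Round-robin scheduler that lives entirely in user space."""
    ready = deque(threads)
    while ready:
        t = ready.popleft()
        try:
            next(t)             # switch to thread t until it yields
            ready.append(t)     # it yielded: back of the ready queue
        except StopIteration:
            pass                # thread finished; drop it
```

Because the kernel sees only one thread here, a blocking call such as time.sleep inside any one of these generators would stall all of them, which is exactly the first disadvantage listed above.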

Kernel-Level Threads (KLTs)

With KLTs, thread management is done by the kernel, so no run-time system is needed in each process.

Advantages of KLTs:

- The kernel can simultaneously schedule multiple threads from the same process on multiple processors.

- If one thread is blocked, the kernel can schedule another thread of the same process.

- Kernel routines themselves can be multithreaded.

The principal disadvantage is the substantial cost of system calls. When a thread wants to create a new thread or destroy an existing one, it makes a kernel call. All calls that may block a thread are also implemented as system calls, at considerably greater cost than a call to a run-time system procedure.
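In CPython, each threading.Thread is backed by a kernel-level thread, which is enough to illustrate the second advantage: when one thread makes a blocking call, the kernel schedules another thread of the same process. This is an illustration under that assumption, and demo is a hypothetical name. CPython's global interpreter lock prevents threads from executing Python bytecode in parallel, so the sketch does not demonstrate the multiprocessor advantage.

```python
import threading
import time


def demo() -> dict:
    """Show two kernel-level threads: one blocks while the other keeps running."""
    info = {}
    progress = threading.Event()

    def blocker():
        info["blocker_id"] = threading.get_native_id()  # kernel-assigned thread ID
        time.sleep(0.3)                # blocking call: only this thread is blocked
        info["blocker_saw_progress"] = progress.is_set()

    def runner():
        info["runner_id"] = threading.get_native_id()
        progress.set()                 # runs while the blocker is still asleep

    b = threading.Thread(target=blocker)
    r = threading.Thread(target=runner)
    b.start()
    r.start()
    b.join()
    r.join()
    return info
```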

Hybrid Approach

In a combined system, thread creation is done completely in user space, as is the bulk of the scheduling and synchronization of threads within an application. The multiple ULTs from a single application are mapped onto some (smaller or equal) number of KLTs.